NASA Improves GIANT Optical Navigation Technology for Future Missions

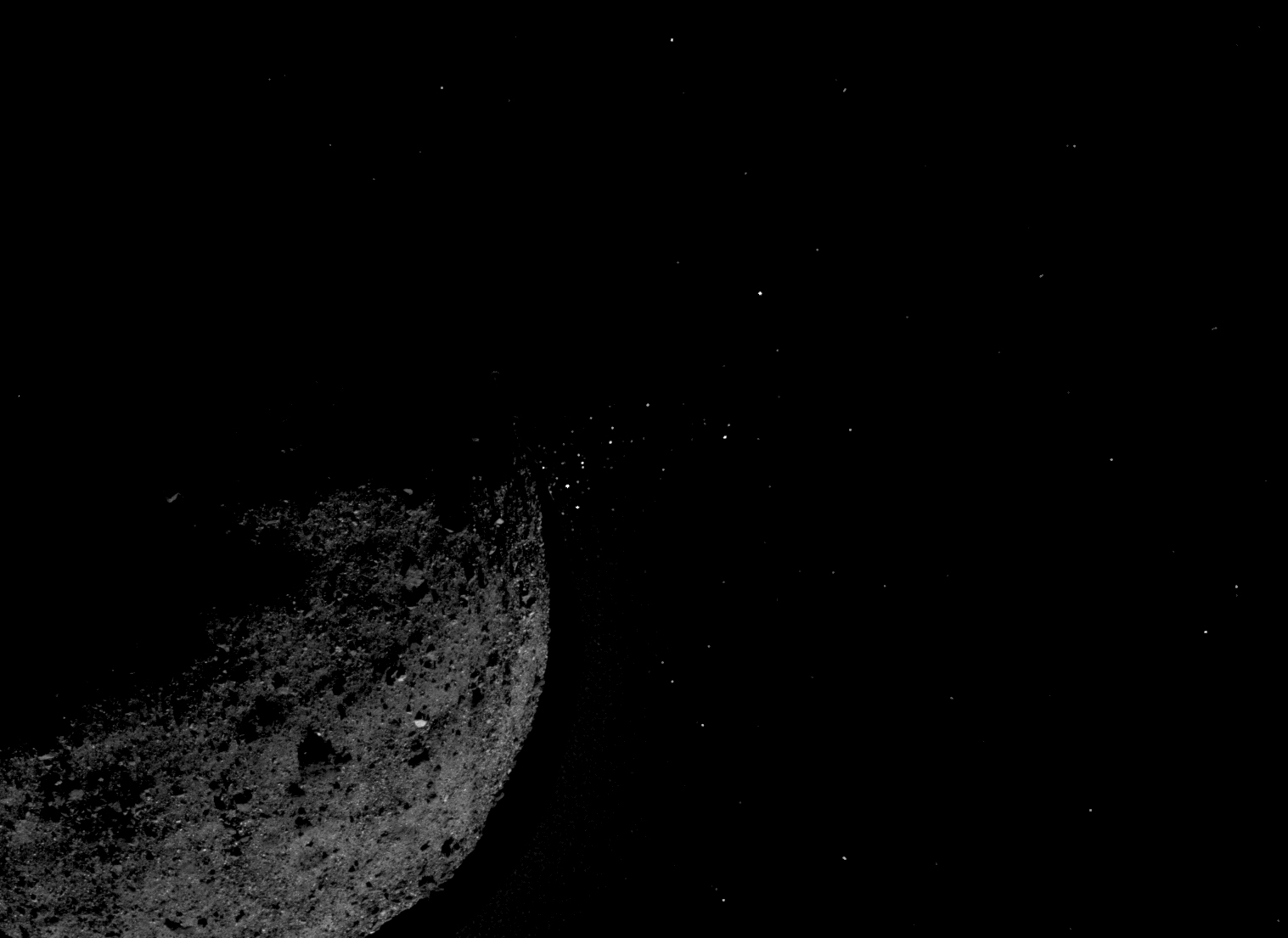

As NASA scientists study the returned fragments of asteroid Bennu, the team that helped navigate the mission on its journey is refining its technology for potential use in future robotic and crewed missions.

The optical navigation team at NASA’s Goddard Space Flight Center in Greenbelt, Maryland, served as a backup navigation resource for the OSIRIS-REx (Origins, Spectral Interpretation, Resource Identification, and Security – Regolith Explorer) mission to near-Earth asteroid Bennu. They double-checked the primary navigation team’s work and proved the viability of navigation by visual cues.

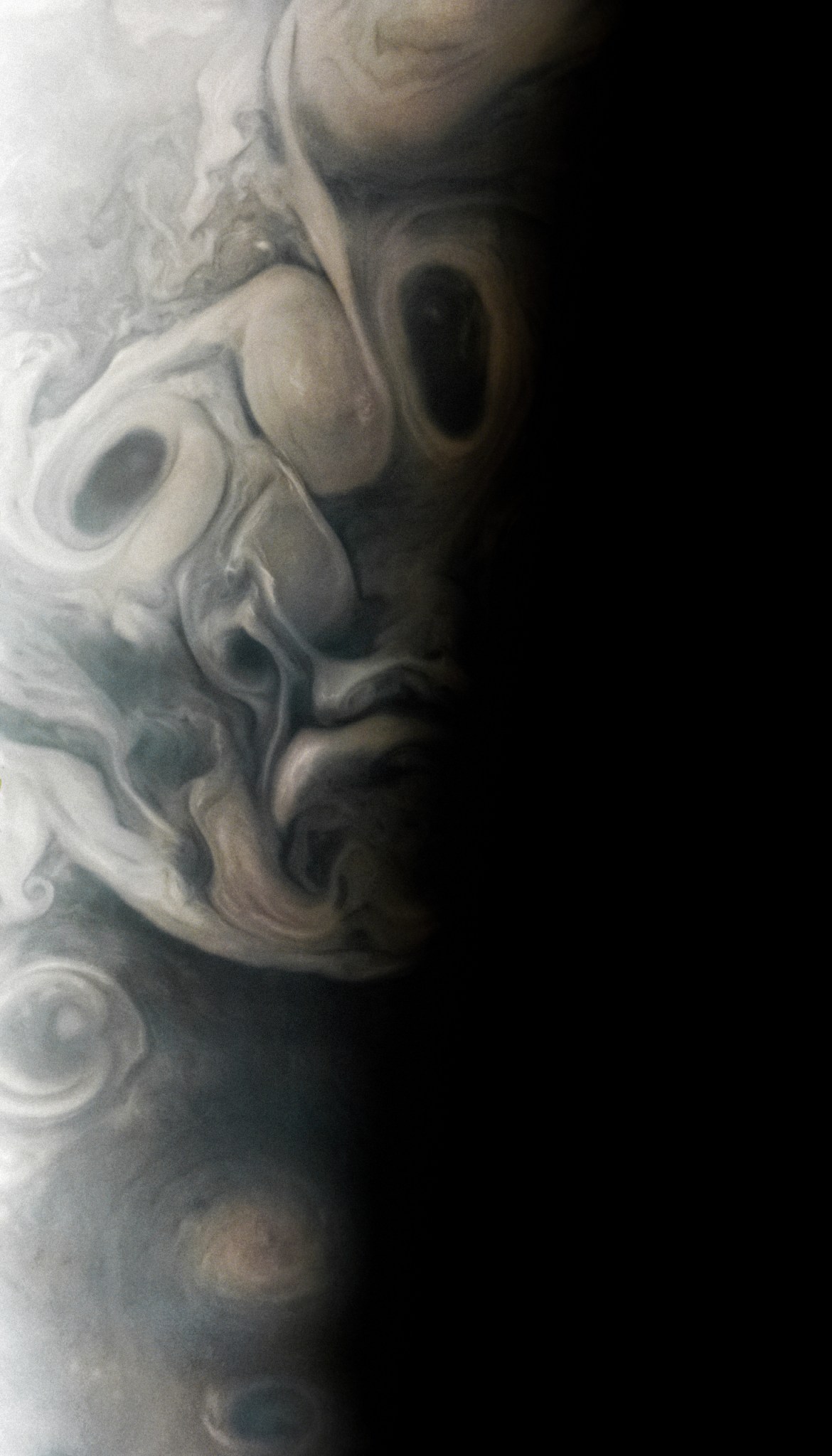

Optical navigation uses observations from cameras, lidar, or other sensors to navigate the way humans do. This cutting-edge technology works by taking pictures of a target, such as Bennu, and identifying landmarks on the surface. GIANT software – that’s short for the Goddard Image Analysis and Navigation Tool – analyzes those images to provide information, such as precise distance to the target, and to develop three-dimensional maps of potential landing zones and hazards. It can also analyze a spinning object to help calculate the target’s mass and determine its center – critical details to know for a mission trying to enter an orbit.
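To give a sense of how an image can yield a distance, here is a minimal sketch in Python. It is not GIANT's code or API. It assumes an idealized pinhole camera, a roughly spherical target centered in the frame, and a known target diameter. The function name and the sample numbers are hypothetical and chosen only for illustration.

```python
import math


def range_from_apparent_size(diameter_m, apparent_diameter_px, focal_length_px):
    """Estimate the distance to the center of a spherical target.

    Assumptions (illustrative, not taken from GIANT):
      - ideal pinhole camera with the focal length expressed in pixels
      - target is a sphere centered on the camera boresight
      - apparent_diameter_px is the limb-to-limb width measured in the image
    """
    if diameter_m <= 0 or apparent_diameter_px <= 0 or focal_length_px <= 0:
        raise ValueError("all inputs must be positive")

    # Angle between the boresight and a ray that grazes the target's limb.
    half_angle = math.atan((apparent_diameter_px / 2.0) / focal_length_px)

    # A limb ray is tangent to the sphere, so sin(half_angle) = radius / range.
    return (diameter_m / 2.0) / math.sin(half_angle)


if __name__ == "__main__":
    # Hypothetical numbers: a 500 m body spanning 1,000 pixels
    # in a camera with a 10,000-pixel focal length.
    distance_m = range_from_apparent_size(500.0, 1000.0, 10000.0)
    print(f"Estimated range: {distance_m:.1f} m")
```

A real optical navigation system works from many landmarks on an irregular body and models camera distortion and measurement noise, so this single-measurement estimate only illustrates the underlying geometry.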

“Onboard autonomous optical navigation is an enabling technology for current and future mission ideas and proposals,” said Andrew Liounis, lead developer for GIANT at Goddard. “It reduces the amount of data that needs to be downlinked to Earth, reducing the cost of communications for smaller missions, and allowing for more science data to be downlinked for larger missions. It also reduces the number of people required to perform orbit determination and navigation on the ground.”

During OSIRIS-REx’s orbit of Bennu, GIANT identified particles flung from the asteroid’s surface. The optical navigation team used images to calculate the particles’ movement and mass, ultimately helping determine they did not pose a significant threat to the spacecraft.

Since then, Liounis said, the team has refined and expanded GIANT’s backbone collection of software utilities and scripts.

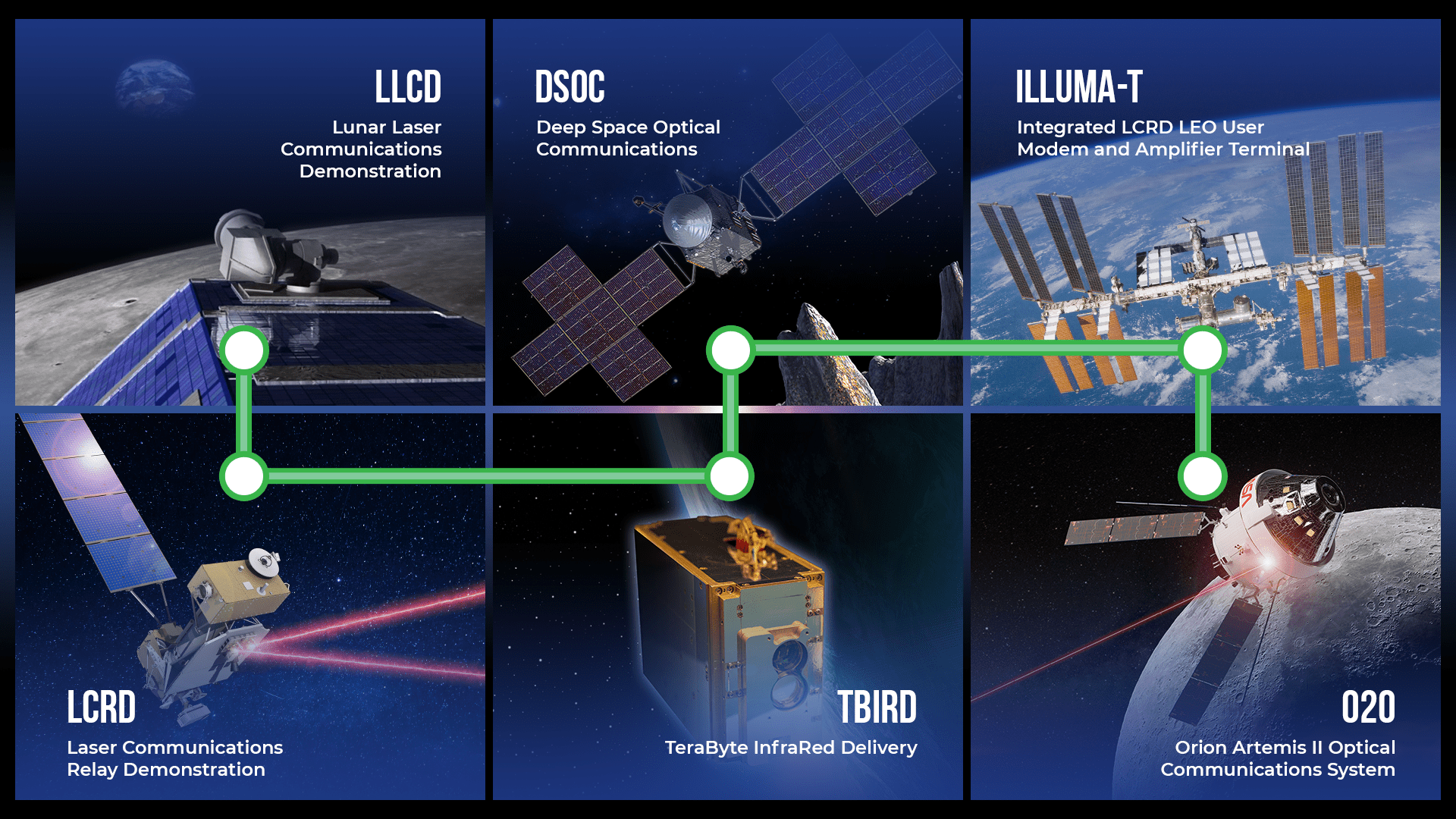

New GIANT developments include an open-source version of their software released to the public, and celestial navigation for deep space travel by observing stars, the Sun, and solar system objects. They are now working on a slimmed-down package to aid in autonomous operations throughout a mission’s life cycle.

“We’re also looking to use GIANT to process some Cassini data with partners at the University of Maryland in order to study Saturn’s interactions with its moons,” Liounis said.

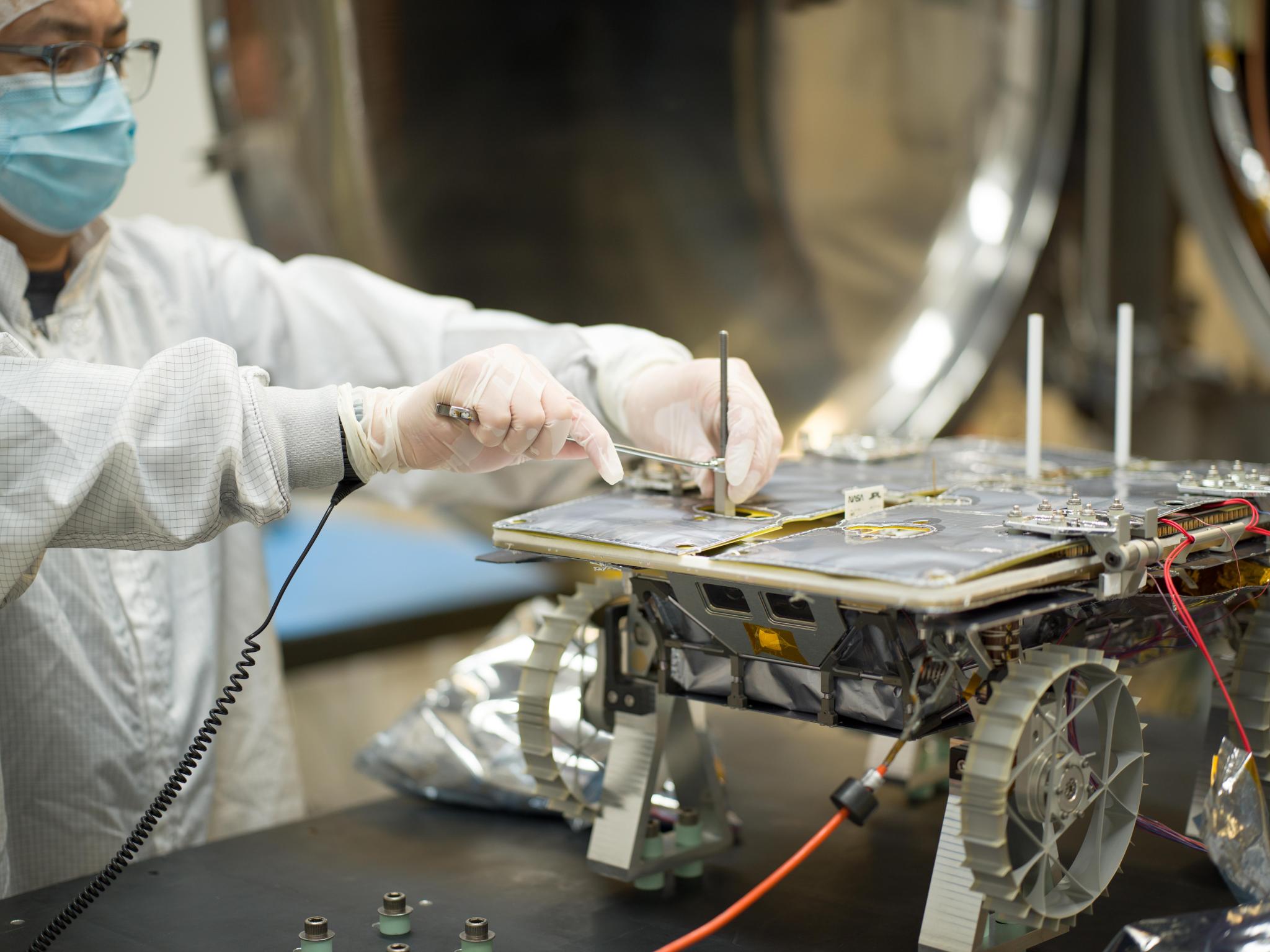

Other innovators like Goddard engineer Alvin Yew are adapting the software to potentially aid rovers and human explorers on the surface of the Moon or other planets.

Adaptation, Improvement

Shortly after OSIRIS-REx left Bennu, Liounis’ team released a refined, open-source version for public use. “We considered a lot of changes to make it easier for the user and a few changes to make it run more efficiently,” he said.

An intern modified the team’s code to make use of a graphics processor for ground-based operations, boosting the image processing at the heart of GIANT’s navigation.

A simplified version called cGIANT works with Goddard’s autonomous Navigation, Guidance, and Control software package, or autoNGC, in ways that can be crucial to both small and large missions, Liounis said.

Liounis and colleague Chris Gnam developed a celestial navigation capability that uses GIANT to steer a spacecraft by processing images of stars, planets, asteroids, and even the Sun. Traditional deep space navigation relies on the mission’s radio signals, tracked through NASA’s Deep Space Network, to determine location, velocity, and distance from Earth. Reducing a mission’s reliance on that network frees up a valuable resource shared by many ongoing missions, Gnam said.
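One common way to turn sightings of known bodies into a position fix is line-of-sight triangulation. The Python sketch below illustrates that general idea only. It is not the method or API used by GIANT. It assumes the positions of the observed bodies are known, the spacecraft's orientation is already known (for example, from star images), and the measurements are noise-free. All function names are hypothetical.

```python
import math


def unit(v):
    """Return v scaled to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    return [c / norm for c in v]


def solve3(a, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("geometry is degenerate (sightings are parallel)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in reversed(range(3)):
        known = sum(m[r][c] * x[c] for c in range(r + 1, 3))
        x[r] = (m[r][3] - known) / m[r][r]
    return x


def triangulate(body_positions, sight_directions):
    """Least-squares position from directions toward bodies at known positions.

    Each sighting constrains the spacecraft to a line through the body.
    Minimizing the summed squared distance to all lines gives the system
    sum(I - u u^T) r = sum(I - u u^T) p, solved here for r.
    """
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for p, d in zip(body_positions, sight_directions):
        u = unit(d)
        for i in range(3):
            for j in range(3):
                proj = (1.0 if i == j else 0.0) - u[i] * u[j]
                a[i][j] += proj
                b[i] += proj * p[j]
    return solve3(a, b)


if __name__ == "__main__":
    # Hypothetical, unitless geometry: three bodies and a spacecraft at (1, -2, 0.5).
    bodies = [[10.0, 0.0, 0.0], [0.0, 10.0, 0.0], [0.0, 0.0, 10.0]]
    truth = [1.0, -2.0, 0.5]
    sights = [[p[k] - truth[k] for k in range(3)] for p in bodies]
    print(triangulate(bodies, sights))
```

At least two sightings along non-parallel directions are needed for a fix. An operational system would also weight each measurement by its uncertainty and filter the result over time.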

Next on their agenda, the team hopes to add planning capabilities so mission controllers can develop flight trajectories and orbits within GIANT – streamlining mission design.

“On OSIRIS-REx, it would take up to three months to plan our next trajectory or orbit,” Liounis said. “Now we can reduce that to a week or so of computer processing time.”

Their innovations have earned the team continuous support from Goddard’s Internal Research and Development program, individual missions, and NASA’s Space Communications and Navigation program.

“As mission concepts become more advanced,” Liounis said, “optical navigation will continue to become a necessary component of the navigation toolbox.”

By Karl B. Hille

NASA’s Goddard Space Flight Center in Greenbelt, Md.
